Deep Learning with Google Cloud Compute: Get Setup

Here at Inqyr, we're big fans of Google Cloud. In fact, we train all our models and even host our NPS solution, Inqyr AI, on Google Cloud.

This is a quick guide with a few gotchas that people may encounter when trying to train their models using Google Cloud Compute.

Quotas for GPUs

GPUs are crucial for fast and efficient training of deep learning models. If you use PyTorch or TensorFlow, chances are you'll want a GPU to speed up your training.

Before setting up your Compute Instance, we suggest requesting a Quota increase from Google Cloud.

There are two quotas of note with Google Cloud:

- Regional Quotas. Google Cloud has multiple regions, and by default each region's quota is set to 1 GPU. If you want more than 1 GPU, you'll need to request an increase for that region.

- Project Quotas. On top of Regional Quotas, there is a Project Level quota (listed as "GPUs (all regions)" on the Quotas page). By default, this is set to 0, so even if you increase a Regional limit, you still won't be able to use a GPU until this limit is raised.

To get started with Deep Learning with Google Cloud, you'll want to increase this Project Level limit first. You can do this by following the steps here.
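
Before requesting an increase, it can help to see what your current limits are. The following is a minimal sketch, assuming the gcloud CLI is installed and authenticated. The function names show_project_gpu_quota and show_region_gpu_quota are our own, hypothetical helpers, not part of gcloud.

```shell
# Sketch: print GPU-related quota lines for a project and for one region.
# Assumes the gcloud CLI is installed and you are logged in.

# Project Level quotas, including the global GPU limit (GPUS_ALL_REGIONS).
# $1 = project ID
show_project_gpu_quota() {
  gcloud compute project-info describe --project "$1" \
    --flatten="quotas[]" \
    --format="table(quotas.metric,quotas.limit,quotas.usage)" \
    | grep -i "gpu"
}

# Regional quotas for a single region (one line per GPU type).
# $1 = region, $2 = project ID
show_region_gpu_quota() {
  gcloud compute regions describe "$1" --project "$2" \
    --flatten="quotas[]" \
    --format="table(quotas.metric,quotas.limit,quotas.usage)" \
    | grep -i "gpu"
}
```

For example, running show_project_gpu_quota $PROJECT_ID should print the GPUS_ALL_REGIONS line. If its limit is still 0, the Project Level increase has not been applied yet.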

Setting up the instance

Taking a slight step back, it may be helpful to explain what Compute Engine is.

Compute Engine is Google Cloud's service for virtual machines in the cloud, the equivalent of Amazon's EC2. As with all cloud providers, the instances are incredibly flexible and can scale from small machines up to large amounts of RAM and many vCPUs. For data teams, they simply provide access to an on-demand GPU.

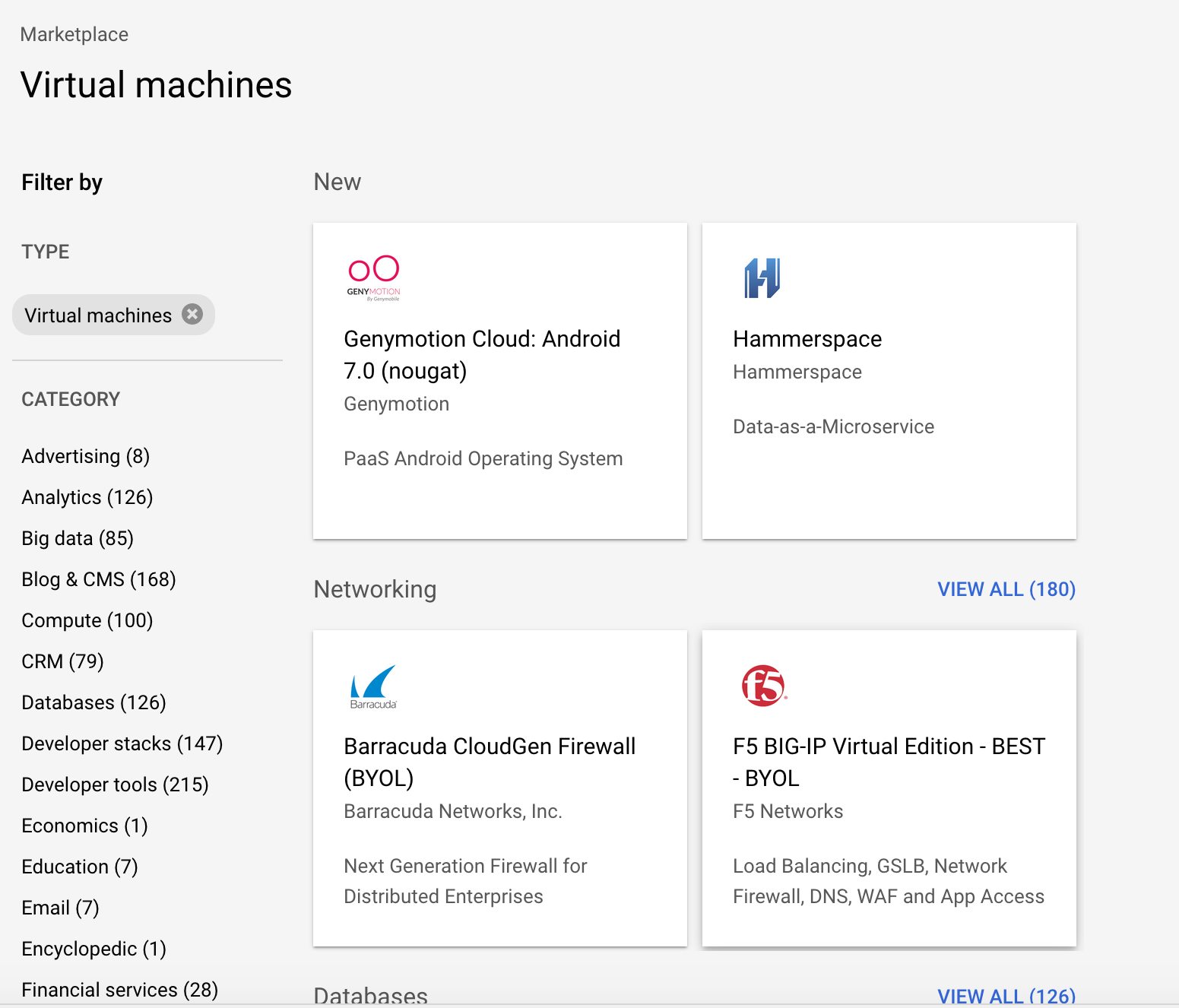

A benefit of Google Cloud is the Marketplace. The Marketplace is a collection of both Google-developed and community-developed solutions for web services, databases, and machine learning instances.

For the most part, we suggest the Deep Learning VM Image. It is developed by Google and supports the most common machine learning packages, including PyTorch and TensorFlow. It abstracts away a lot of the setup and is a simple Click to Deploy.

Clicking Launch Instance on the link above will guide you through the process of adding your instance. It's relatively straightforward to step through, so we won't go into details.
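
If you prefer the command line to the Marketplace UI, a Deep Learning VM can also be created with gcloud. This is only a sketch: the image family (pytorch-latest-gpu), machine type, and GPU type below are assumptions we picked for illustration, and create_dl_vm is a hypothetical helper name. Pick values that match your quota and zone.

```shell
# Sketch: create a single-GPU Deep Learning VM from the command line.
# Assumed values: image family, machine type, and GPU type. Adjust as needed.
# $1 = instance name, $2 = zone
create_dl_vm() {
  gcloud compute instances create "$1" \
    --zone="$2" \
    --image-family="pytorch-latest-gpu" \
    --image-project="deeplearning-platform-release" \
    --machine-type="n1-standard-8" \
    --accelerator="type=nvidia-tesla-t4,count=1" \
    --maintenance-policy=TERMINATE \
    --metadata="install-nvidia-driver=True"
}
```

Calling create_dl_vm $INSTANCE_NAME $ZONE would request one instance with one GPU. The --maintenance-policy=TERMINATE flag is there because GPU instances cannot be live-migrated during host maintenance.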

Connecting to your Instance

When you use the Deep Learning VM Image, JupyterLab is already running and can be accessed from your local machine/laptop using an SSH tunnel.

gcloud compute ssh --project $PROJECT_ID --zone $ZONE \
  $INSTANCE_NAME -- -L 8080:localhost:8080

In this command, we use the gcloud CLI tool to create an SSH tunnel from port 8080 on your local machine to port 8080 on your instance. Once it is connected, JupyterLab is available in your browser at localhost:8080.

The values for these environment variables can be found by running gcloud config list (for the project ID) and gcloud compute instances list (for the instance name and zone).
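
One way to fill in these variables without copying values by hand is sketched below. It assumes the gcloud CLI already has a default project configured. The helpers current_project and instance_zone are hypothetical names of our own.

```shell
# Sketch: look up the values used by the SSH tunnel command.
# Assumes gcloud is installed and a default project is configured.

# Print the project ID from the active gcloud configuration.
current_project() {
  gcloud config get-value project 2>/dev/null
}

# Print the short zone name (e.g. us-west1-b) of a named instance.
# $1 = instance name
instance_zone() {
  gcloud compute instances list \
    --filter="name=$1" \
    --format="value(zone.basename())"
}
```

With these defined, you could run export PROJECT_ID="$(current_project)" and export ZONE="$(instance_zone $INSTANCE_NAME)" before opening the tunnel.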

If you need help training your model or with Google Cloud, get in touch with us and we'll be happy to help you out.